Howdy! I’m a Ph.D. candidate in Statistics at Boston University, co-advised by Prof. Debarghya Mukherjee and Prof. Luis Carvalho, and I also collaborate with Prof. Nabarun Deb. Before BU, I earned my M.A. in Statistics from Columbia University and my B.S. in Mathematics from Shandong University, including a year of joint training at the Academy of Mathematics and Systems Science (AMSS), Chinese Academy of Sciences. My research sits at the intersection of statistics and machine learning, where I develop theoretically grounded transfer-learning and representation-learning methods, spanning optimal transport, graph mining, and multimodal learning, for structured, heterogeneous data in low-sample, high-dimensional, and non-IID settings.

The question that keeps me up (in a good way):

How can we reuse past knowledge when the world—and the data—won’t sit still?

In statistical learning, this is about transferring geometry or smoothness from a well-understood source distribution to a smaller, noisier target under shift. In reinforcement learning, the source might be prior trajectories, simulators, or related tasks, while the target is the evolving environment, so we need principled rules for what to keep, what to adapt, and what to forget. And yes! LLMs/VLMs make this even more exciting (and tricky): they already contain a lot of cross-domain knowledge, but the real challenge is extracting and specializing it safely for downstream tasks without overfitting, hallucination, or misalignment.

What I build

- Theory that actually supports practice: minimax rates, oracle inequalities, regret bounds, and safe-transfer criteria under covariate or structural shift.

- Graph-structured transfer methods: aligning and transporting information across graphs/manifolds to make transfer robust when correspondence is messy or unknown.

- RL/bandits under drift: warm-started policies with uncertainty-aware adaptation for reliable sequential decision-making in changing environments.

- Transfer principles for LLMs/VLMs: controlled adaptation, domain grounding, and structure-preserving fine-tuning, so models adapt without getting sloppy.

Curious about my research? I put together a friendly, no-jargon slide deck on how I think about transfer learning (and why it matters).

🔥 News

- 2025.09: 🎉 My first-author paper “Transfer Learning on Edge Connecting Probability Estimation Under Graphon Model” was accepted to NeurIPS 2025!

- 2025.08: 🎉 My co-authored paper “Multi-scale based Cross-modal Semantic Alignment Network for Radiology Report Generation” was accepted to IEEE SMC 2025!

- 2025.08: 🎉 My co-authored paper “Cross-Domain Hyperspectral Image Classification via Mamba-CNN and Knowledge Distillation” was accepted to IEEE TGRS 2025!

📝 Publications

Lead Author

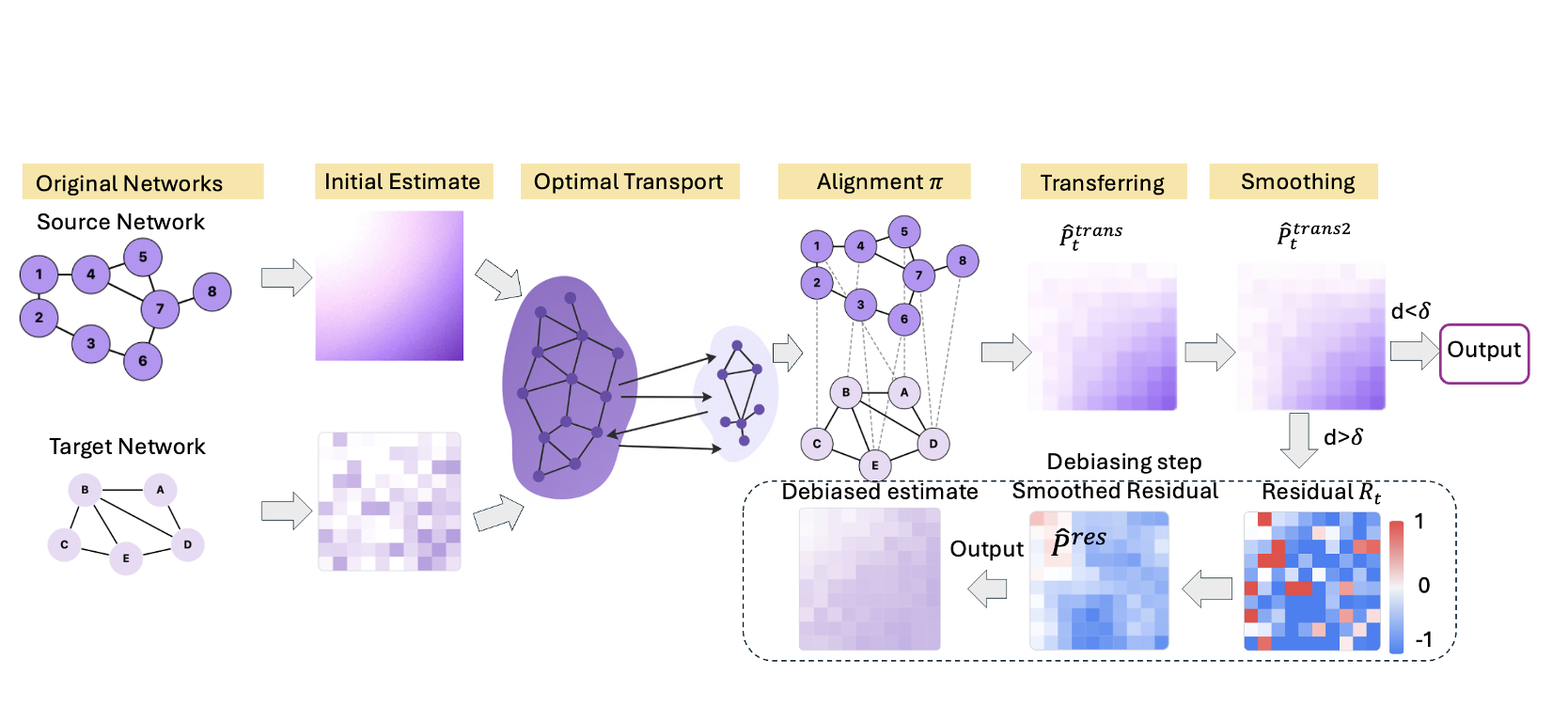

Transfer Learning on Edge Connecting Probability Estimation Under Graphon Model

· NeurIPS · Poster · Slides · Code

NeurIPS 2025

- Graphon transfer without node correspondence: aligns source/target graphs via Gromov–Wasserstein, then transfers edge-probability structure in a fully nonparametric way.

- Provably stable + practically strong: residual smoothing boosts small/sparse/heterogeneous targets, with convergence & stability guarantees and SOTA gains on link prediction / graph classification.

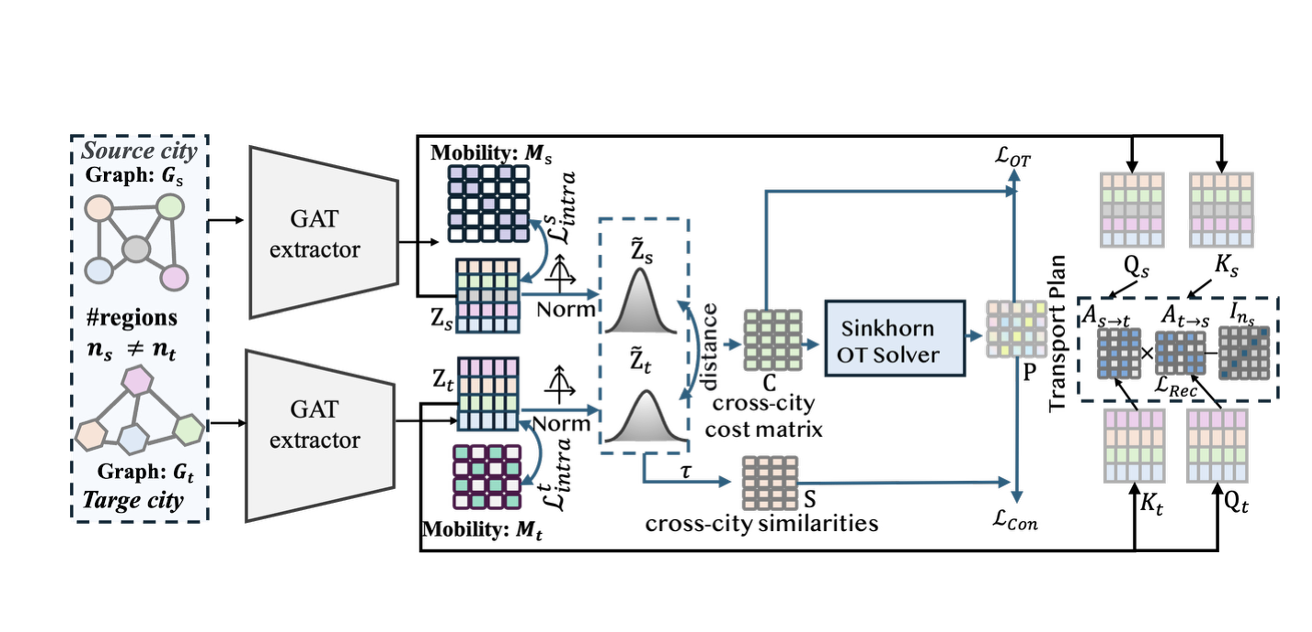

SCOT: Multi-Source Cross-City Transfer with Optimal-Transport Soft-Correspondence Objectives

Boston University; Shandong University

- Explicit many-to-many correspondence (no node matching): learns a Sinkhorn entropic-OT coupling between unequal region sets and uses it to define soft aligned pairs for cross-city transfer.

- OT-guided semantic sharpening + stability: combines OT-weighted contrastive alignment with cycle-style reconstruction; extends to multi-source with a target-aware prototype hub to prevent collapse and handle strong heterogeneity.
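For readers unfamiliar with the entropic-OT coupling mentioned above, here is a minimal, generic Sinkhorn sketch in pure Python. It is not the SCOT implementation: the toy 1-D region features, the squared-distance cost, the uniform marginals, and the `epsilon` value are all illustrative assumptions, and `sinkhorn_coupling` is a hypothetical helper name. It only shows how a soft many-to-many coupling between two region sets of unequal size can be computed.

```python
import math


def sinkhorn_coupling(cost, a, b, epsilon=0.1, n_iters=500):
    """Return an entropic-OT coupling P with row sums ~a and column sums ~b.

    cost: n x m list of lists (pairwise costs); a, b: marginal weights.
    """
    n, m = len(a), len(b)
    # Gibbs kernel K_ij = exp(-C_ij / epsilon)
    K = [[math.exp(-cost[i][j] / epsilon) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows to match a and columns to match b
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    # Coupling P = diag(u) K diag(v)
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


# Hypothetical toy data: 3 "source regions" and 2 "target regions",
# each described by a single scalar feature (an assumption for illustration).
source = [0.0, 0.5, 1.0]
target = [0.1, 0.9]
cost = [[(s - t) ** 2 for t in target] for s in source]
a = [1 / 3] * 3  # uniform mass over source regions
b = [1 / 2] * 2  # uniform mass over target regions

P = sinkhorn_coupling(cost, a, b)
for row in P:
    print([round(p, 4) for p in row])
```

Each row of `P` spreads a source region's mass over the target regions, so a region that sits between two targets is softly matched to both rather than forced into a one-to-one assignment. Smaller `epsilon` values give sharper couplings but may need log-domain updates for numerical stability.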

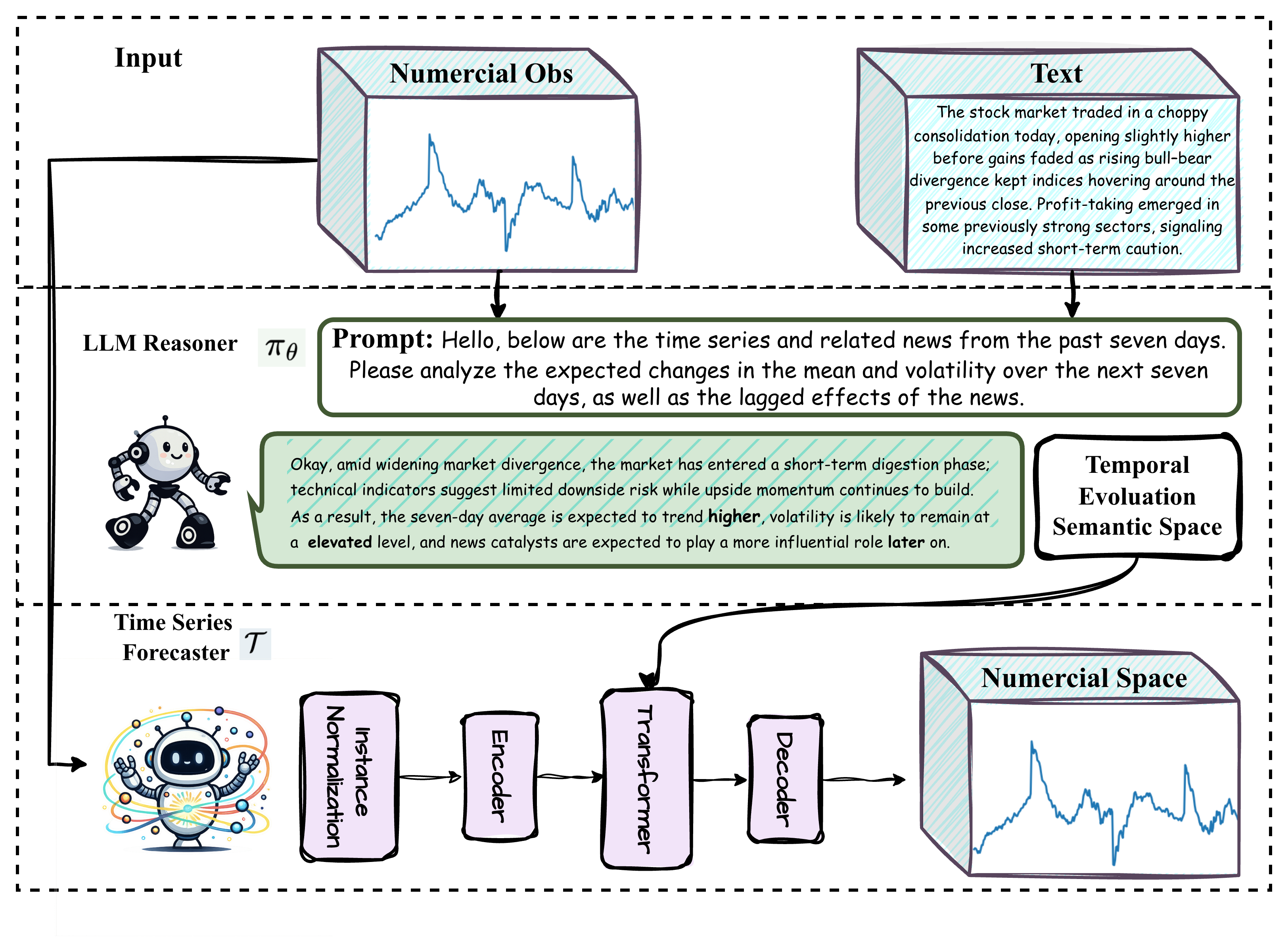

From Text to Forecasts: Bridging Modality Gap with Temporal Evolution Semantic Space

Boston University; Hong Kong University of Science and Technology; Shandong University

- Bridges the text–time-series modality gap: introduces a Temporal Evolution Semantic Space (TESS) that distills free-form text into interpretable temporal primitives (mean shift, volatility, shape, lag), instead of directly fusing noisy token embeddings.

- LLM-guided yet numerically grounded forecasting: uses structured prompting + confidence-aware gating to inject reliable semantic signals as prefix tokens into a Transformer forecaster, yielding robust gains under event-driven non-stationarity (up to 29% error reduction).

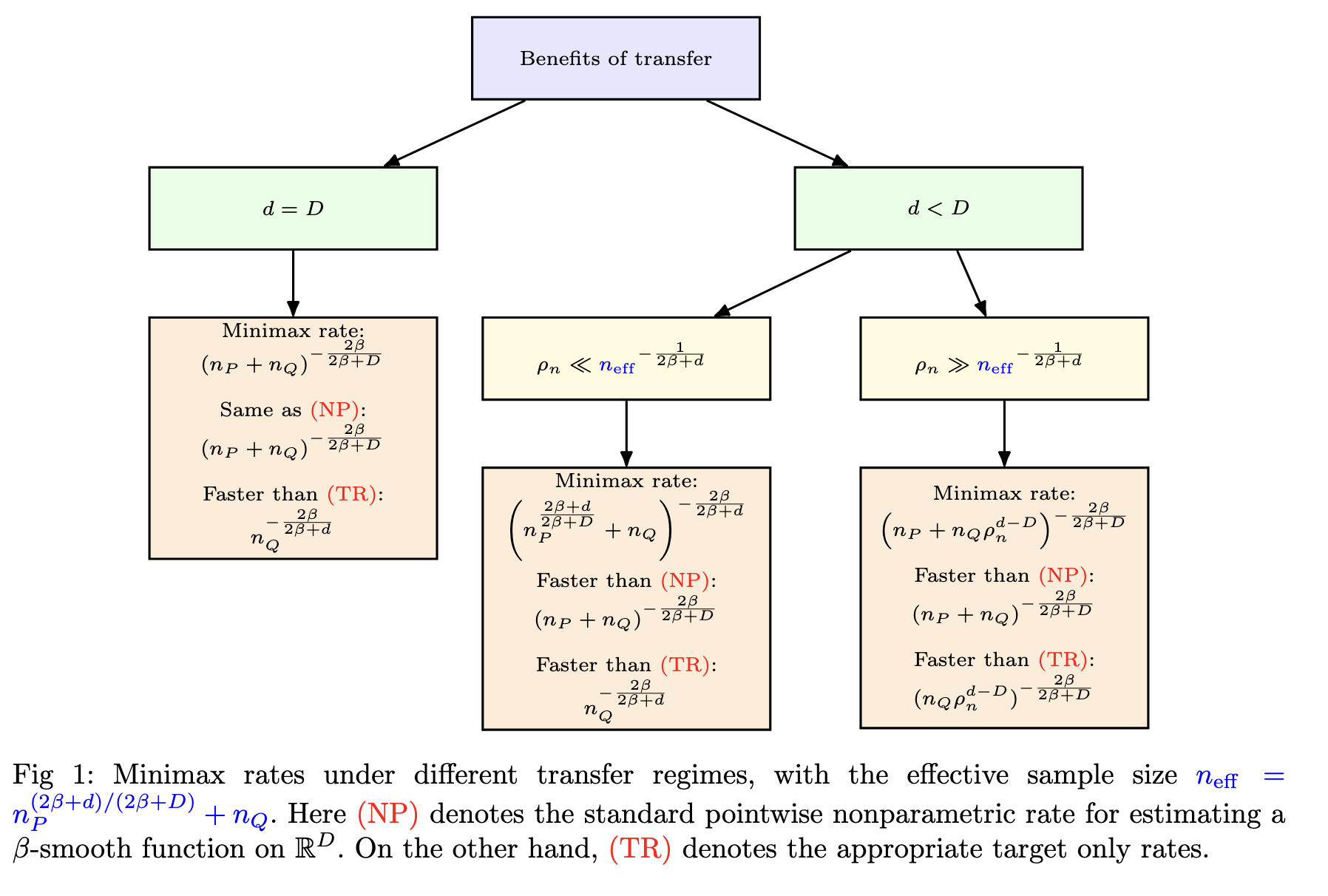

Phase Transition in Nonparametric Minimax Rates for Covariate Shifts on Approximate Manifolds

· Code · Poster · Slides

Boston University; Chicago Booth

- New minimax theory for “near-manifold” shift: exposes a sharp phase transition controlled by the support gap between target and source neighborhoods—unifying multiple geometric-transfer regimes.

- Ratio-free, adaptive estimator: achieves near-optimal, dimension-adaptive rates without density ratios and without assuming knowledge of the geometry (works under approximate manifold mismatch).

Co-author

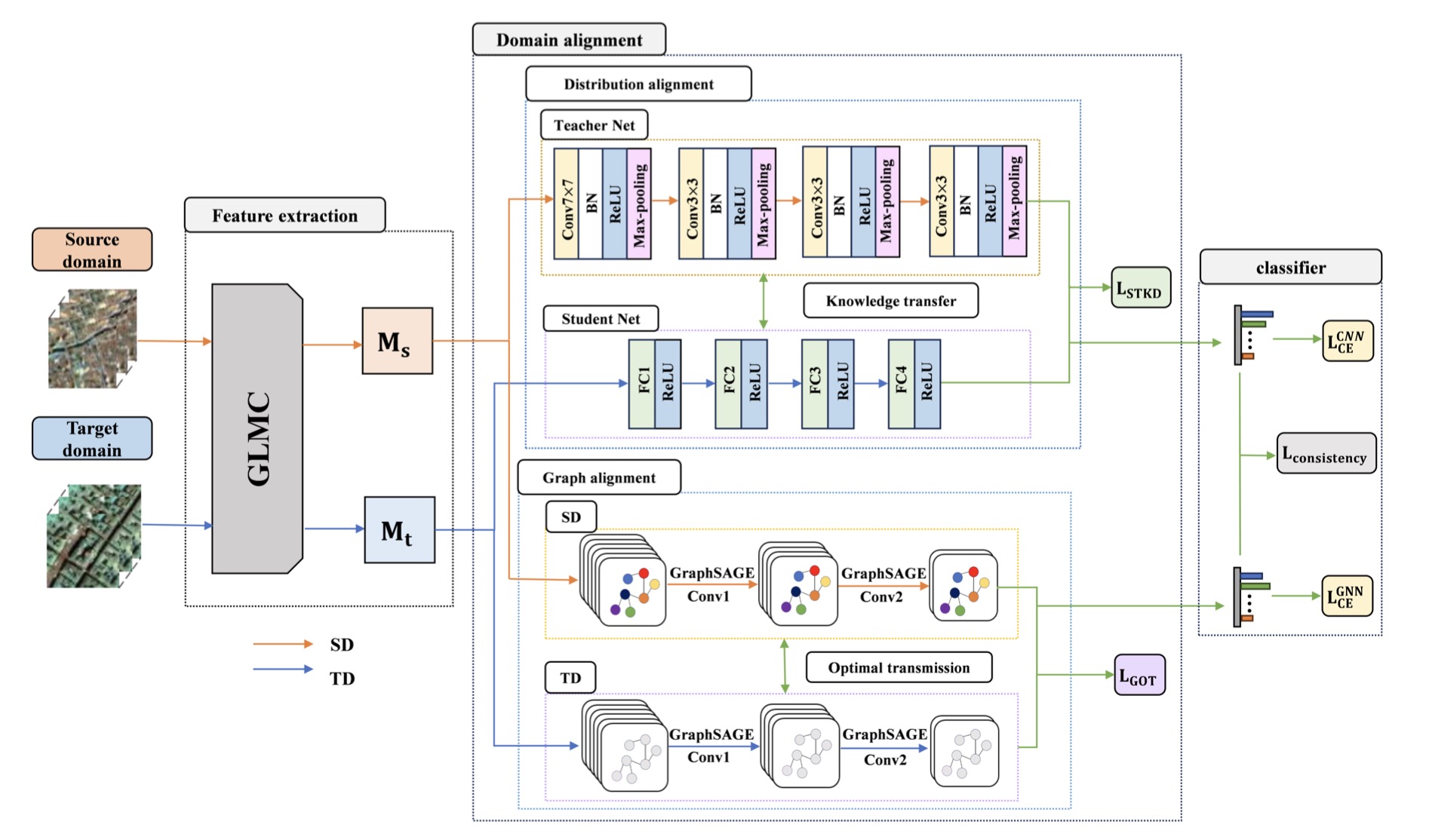

Cross-Domain Hyperspectral Image Classification via Mamba-CNN and Knowledge Distillation

· Slides

IEEE TGRS 2025

- Hybrid spectral–spatial modeling for domain shift: integrates a Mamba-based global spectral encoder with CNN local feature extraction, capturing long-range dependencies while preserving fine-grained spatial structure in hyperspectral images.

- Dual-level transfer via distillation + graph alignment: performs teacher–student knowledge distillation for distribution alignment and OT-guided graph consistency across domains, yielding robust cross-domain generalization under severe spectral mismatch.

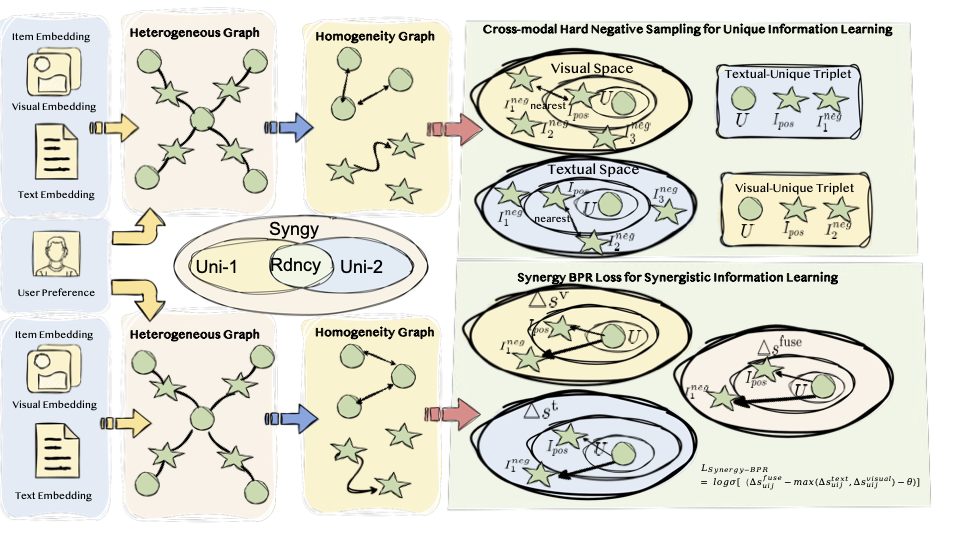

Inconsistency aware Multi Modal Recommendation

Boston University; Shandong University; Tsinghua University

- Disentangles synergy vs redundancy in multimodal signals: explicitly decomposes item information into unique (text / vision) and synergistic components using heterogeneous–homogeneity graph transformations, avoiding naïve feature fusion.

- Inconsistency-aware learning with principled negatives: introduces cross-modal hard negative sampling and a Synergy-BPR objective that suppresses redundant cues while amplifying truly complementary signals, yielding robust gains under noisy or conflicting modalities.

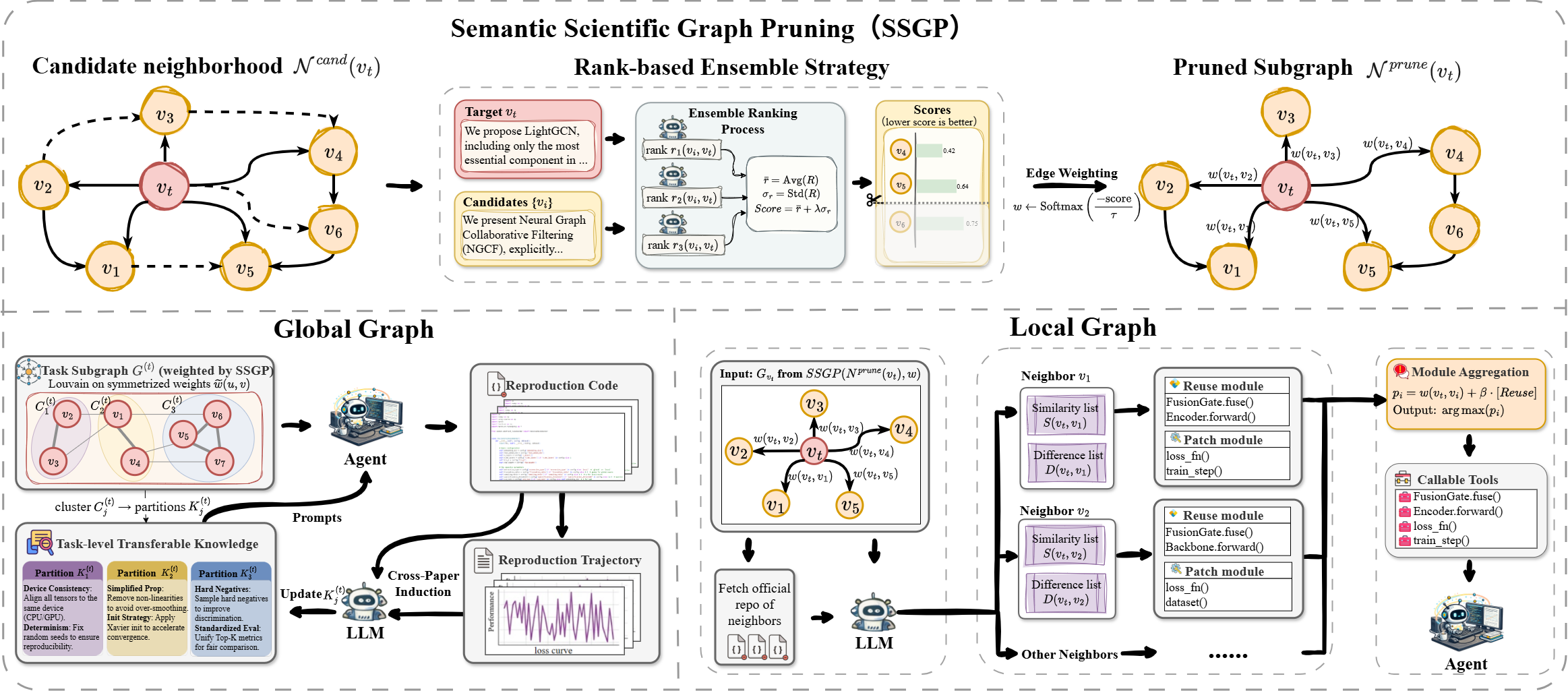

Semantic Scientific Graph Pruning for Reliable Agentic Paper Reproduction

Boston University; Shandong University

- Semantic pruning for controllable agent search: proposes Semantic Scientific Graph Pruning (SSGP) to transform dense scientific graphs into task-adaptive local subgraphs, using rank-based ensemble scoring to retain only semantically essential neighbors.

- Enables reliable agentic reproduction: couples pruned graphs with reuse–patch modular execution and confidence-weighted aggregation, dramatically reducing search space while improving reproducibility, stability, and success rate of LLM-based scientific agents.

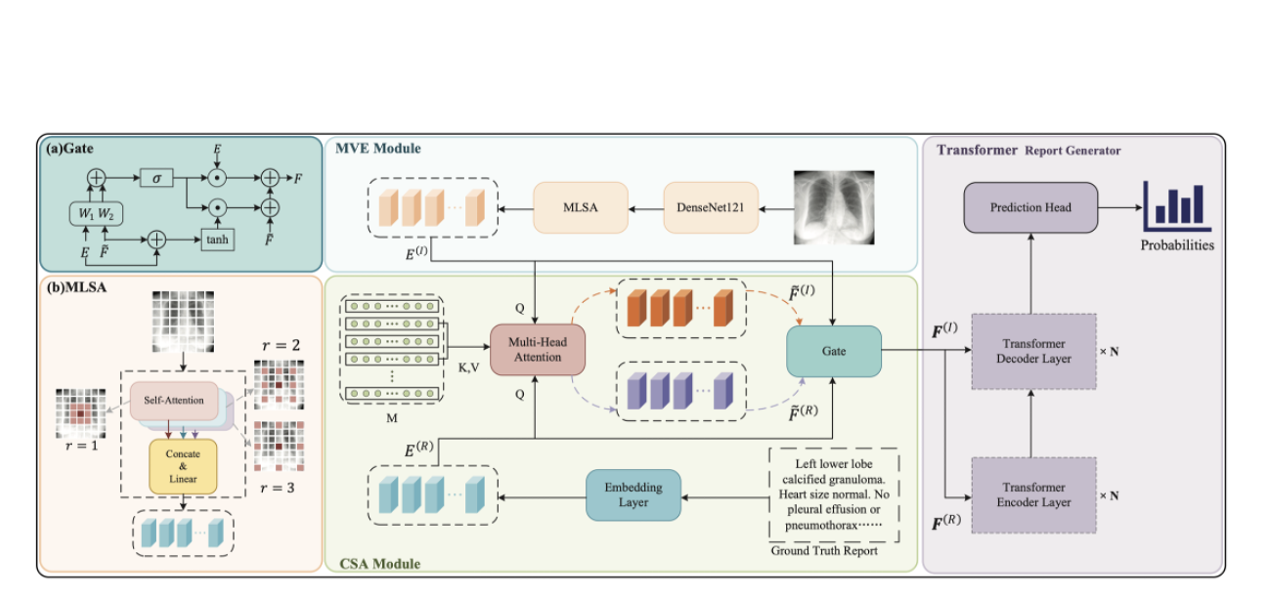

Multi-scale based Cross-modal Semantic Alignment Network for Radiology Report Generation

IEEE SMC 2025

- Multi-scale visual encoding for clinically salient abnormalities: builds hierarchical image representations that capture both global anatomy and fine-grained lesion cues, improving localization of subtle findings critical for report generation.

- Cross-modal semantic alignment with learnable fusion: enforces tight image–text correspondence via learnable cross-modal interactions (alignment-driven fusion), reducing hallucinated statements and boosting abnormality-focused sentence generation.

🎖 Honors

- 2025.09: Student Travel Grant, Boston University

- 2025.05: Ralph B. D’Agostino Endowed Fellowship, Boston University

- 2025.04: Outstanding Teaching Fellow Award, Boston University

- 2019.06: Outstanding Graduate, Shandong University

- 2018.10: Hua Loo-Keng Scholarship, Chinese Academy of Sciences

- 2018.09: First-Class Scholarship, Shandong University

- 2018.09: Outstanding Student Leader, Shandong University

📖 Education

- 2021.09 – Present: Ph.D. in Statistics, Boston University

- 2019.09 – 2020.05: M.A. in Statistics (Data Science Track), Columbia University

- 2018.05 – 2019.06: B.S. in Mathematics, Chinese Academy of Sciences (Jointly Supervised Talent Program)

- 2015.09 – 2019.06: B.S. in Mathematics, Shandong University

💻 Internships

- 2025.05 – 2025.08: Data Scientist Intern, Plymouth Rock Insurance (Boston, MA)

  - Built an AWS SageMaker pipeline for property-level loss prediction; improved Gini by 4.3% using XGBoost with a Tweedie objective

  - Developed LLM-powered image risk scoring with GPT-4o + Google Street View; integrated outputs into actuarial models

🗂️ Projects

- Dog Breed Classification (CNN + VGG16/ResNet50) · Code · Demo — 75.48% accuracy (Stanford Dogs); Flask deployment.

- Credit Risk Prediction (XGBoost + SMOTE) · Code — 0.976 AUC; default-class recall 91%, F1 0.95.

- Pedestrian Detection (Fast R-CNN style + Siamese) · Code — few-shot-ready pipeline + pruning/fusion for faster inference.

- Mask Detection (ResNet50 + Grad-CAM) · Code — 94% test accuracy; explainable predictions via Grad-CAM.

- LLaMA 2 Fine-Tuning (QLoRA) · Code — 4-bit QLoRA (PEFT/bitsandbytes) instruction tuning on consumer GPUs.

- LLM 1-bit Quantization (HQQ) · Code — HQQ 1-bit weight quantization on LLaMA 2; speed–accuracy benchmarks.

- RLHF (PPO) · Code — PPO-based RLHF with custom rewards for controllable generation.

- Financial Sentiment Analysis (DistilBERT) · Code · Demo — 85% accuracy; 30% faster inference.

- Spam Detection (TF-IDF + Naive Bayes) · Code — 96% precision / 94% recall; interpretable token analysis.

- Interactive Airbnb Booking Dashboard (R Shiny) · Code · Demo — interactive maps + real-time filtering.

- Bayesian Logistic Regression (RStan; Spike-and-Slab) · Code · Demo — sparse selection + full MCMC diagnostics.

- A/B Testing for Ad Targeting Optimization · Code — bootstrap CIs + power analysis; drove +15% conversion.

- Time Series Forecasting for Financial Exposure (SARIMA/ETS/Prophet) · Code · Demo — robust forecasts + residual diagnostics.

- Movie Recommendation · Code — ALS/SVD + kernel ridge refinement; accuracy ↑ 15%, compute ↓ 20%.

- Customer Segmentation · Code — elbow/silhouette-driven clustering for targeting.

- R&B Lyrical Analysis (LDA + VADER) · Code — topic discovery + sentiment trends across decades.

📃 Academic Service & Presentations

- Presentations: CIKM 2024; NeurIPS 2025

- Reviewer: CIKM 2025; ICME 2026; ICML 2026; KDD 2026

📝 Teaching Experience

- Instructor, Boston University: MA 582 Mathematical Statistics; MA 113 Elementary Statistics

- Teaching Assistant, Boston University: MA 575 Generalized Linear Models; MA 582 Mathematical Statistics; MA 415 Data Science in R; MA 214 Applied Statistics; MA 213 Statistics and Probability; MA 113 Elementary Statistics

🎨 Interests

🎵 Mandarin R&B loyalist — Leehom Wang, David Tao, Khalil Fong🦋, Dean Ting

🎹 Trained in piano, calligraphy, and ink painting

🏞️ National park lover · 🫧 lake admirer · 🌅 opacarophile — welcome to my Gallery