I’m Yuyao Wang, a Ph.D. candidate in Statistics at Boston University, co-advised by Debarghya Mukherjee and Luis Carvalho.

My research spans transfer learning, optimal transport, graph mining, nonparametric statistics, and reinforcement learning, focusing on developing theoretically grounded methods for structured and heterogeneous data in low-sample, high-dimensional, and non-IID settings.

I am driven by a fundamental question:

How can we reliably reuse past knowledge when the data-generating world keeps shifting?

In statistical learning, this means transferring geometric structure or smoothness from a well-understood source distribution to a smaller or noisier target. In reinforcement learning, the source consists of prior trajectories, simulators, or related tasks, while the target is an evolving environment, which calls for principled rules on what to retain, what to adapt, and what to forget. In the era of LLMs and VLMs, transfer becomes even more critical: these models implicitly encode vast cross-domain knowledge, and the challenge is to extract, specialize, and safely adapt that knowledge to downstream tasks without overfitting, hallucination, or misalignment.

What I build:

- Theoretical foundations: minimax rates, oracle inequalities, regret bounds, and safe transfer criteria under covariate or structural shift.

- Graph-structured transfer methods: alignment and transport of information across graphs and manifolds.

- RL/bandit algorithms under drift: warm-started policies with uncertainty-aware adaptation for robust sequential decision-making.

- LLM/VLM transfer principles: mechanisms for controlled adaptation, domain grounding, and structure-preserving fine-tuning.

🔥 News

- 2025.09: 🎉 My first-author paper “Transfer Learning on Edge Connecting Probability Estimation Under Graphon Model” has been accepted to NeurIPS 2025!

- 2025.08: 🎉 My co-authored paper “Cross-Domain Hyperspectral Image Classification via Mamba-CNN and Knowledge Distillation” has been accepted by IEEE TGRS 2025!

📝 Publications

Transfer Learning on Edge Connecting Probability Estimation Under Graphon Model · NeurIPS 2025 · Poster · Slides · Code

Yuyao Wang, Yu-Hung Cheng, Debarghya Mukherjee, Huimin Cheng

Boston University

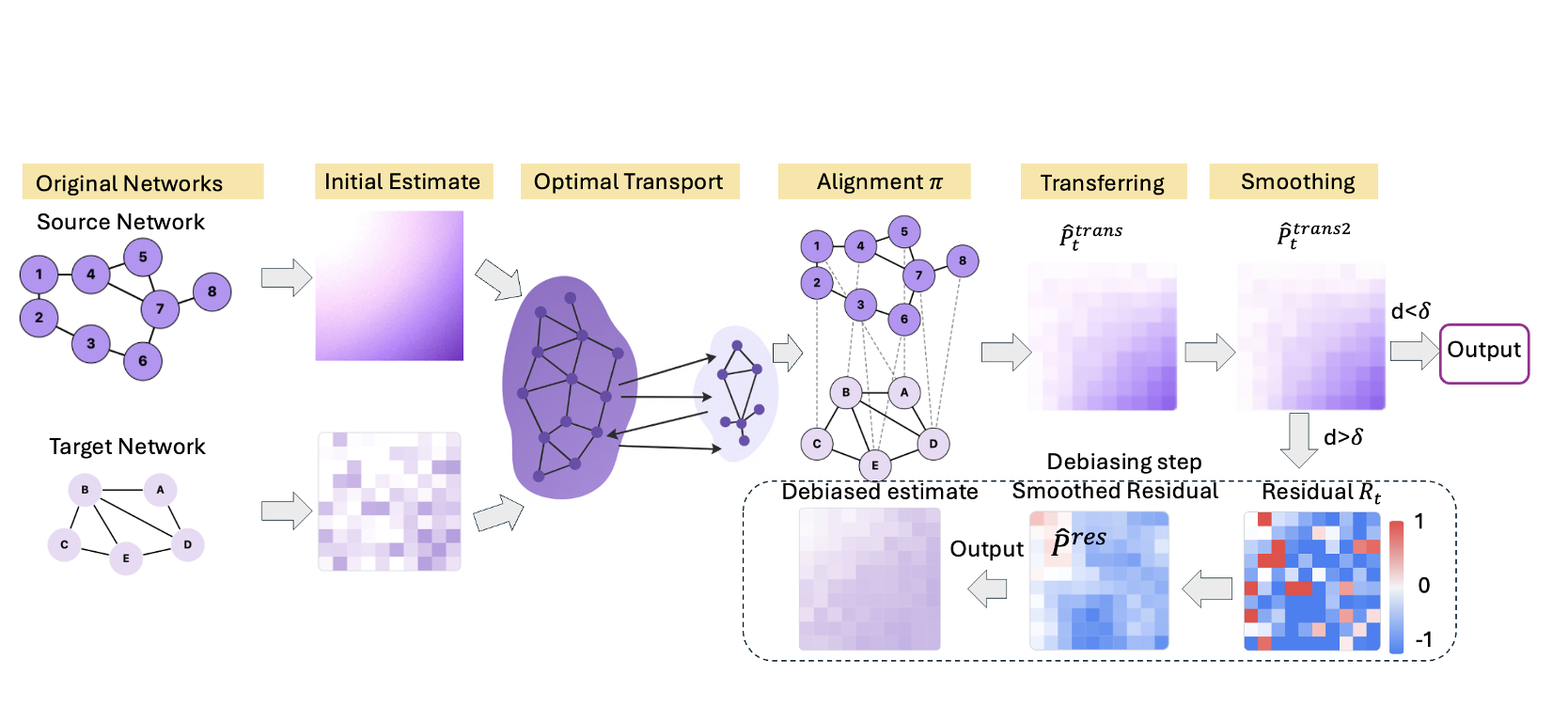

- Proposes the first transfer learning framework for graphon-based edge probability estimation that does not require node correspondence.

- Combines Gromov–Wasserstein alignment and residual smoothing, with nonparametric stability and convergence guarantees.

- Achieves state-of-the-art link prediction and graph classification performance, particularly on small, sparse, and heterogeneous target graphs.

Phase Transition in Nonparametric Minimax Rates for Covariate Shifts on Approximate Manifolds · Code · Poster · Presentation

Yuyao Wang, Nabarun Deb, Debarghya Mukherjee

Boston University; The University of Chicago Booth School of Business

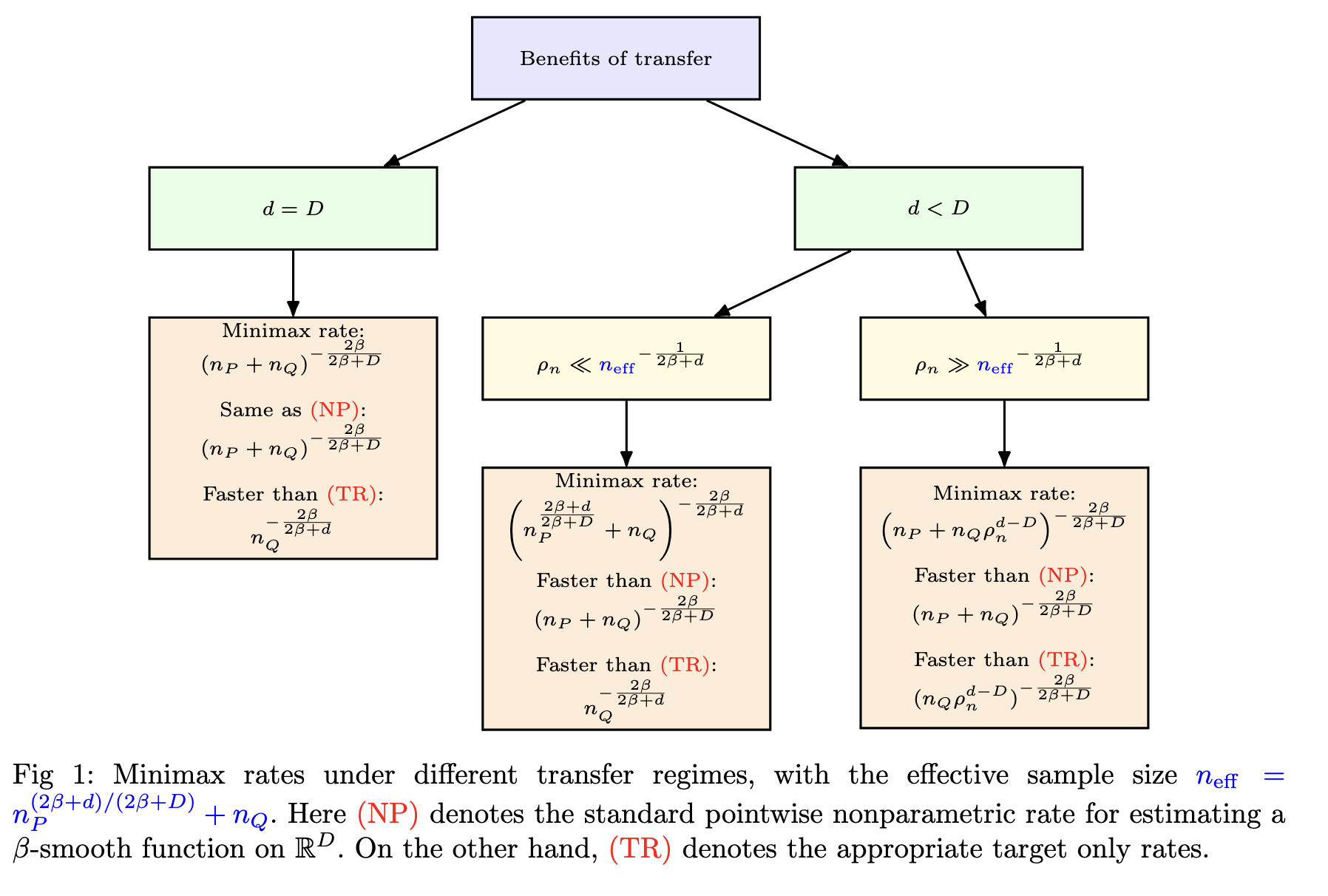

- Establishes new minimax rates for Hölder regression under covariate shift when the target lies near—but not on—the source manifold.

- Reveals a sharp phase transition: estimation difficulty is governed by the target–source support gap, unifying prior geometric-transfer regimes.

- Develops an estimator that requires neither density ratios nor geometric assumptions and still achieves near-optimal, dimension-adaptive rates.

Cross-Domain Hyperspectral Image Classification via Mamba-CNN and Knowledge Distillation · IEEE TGRS 2025 · Presentation

Aoyan Du, Guixin Zhao, Yuyao Wang, Aimei Dong, Guohua Lv, Yongbiao Gao, Xiangjun Dong

Shandong Computer Science Center; Boston University

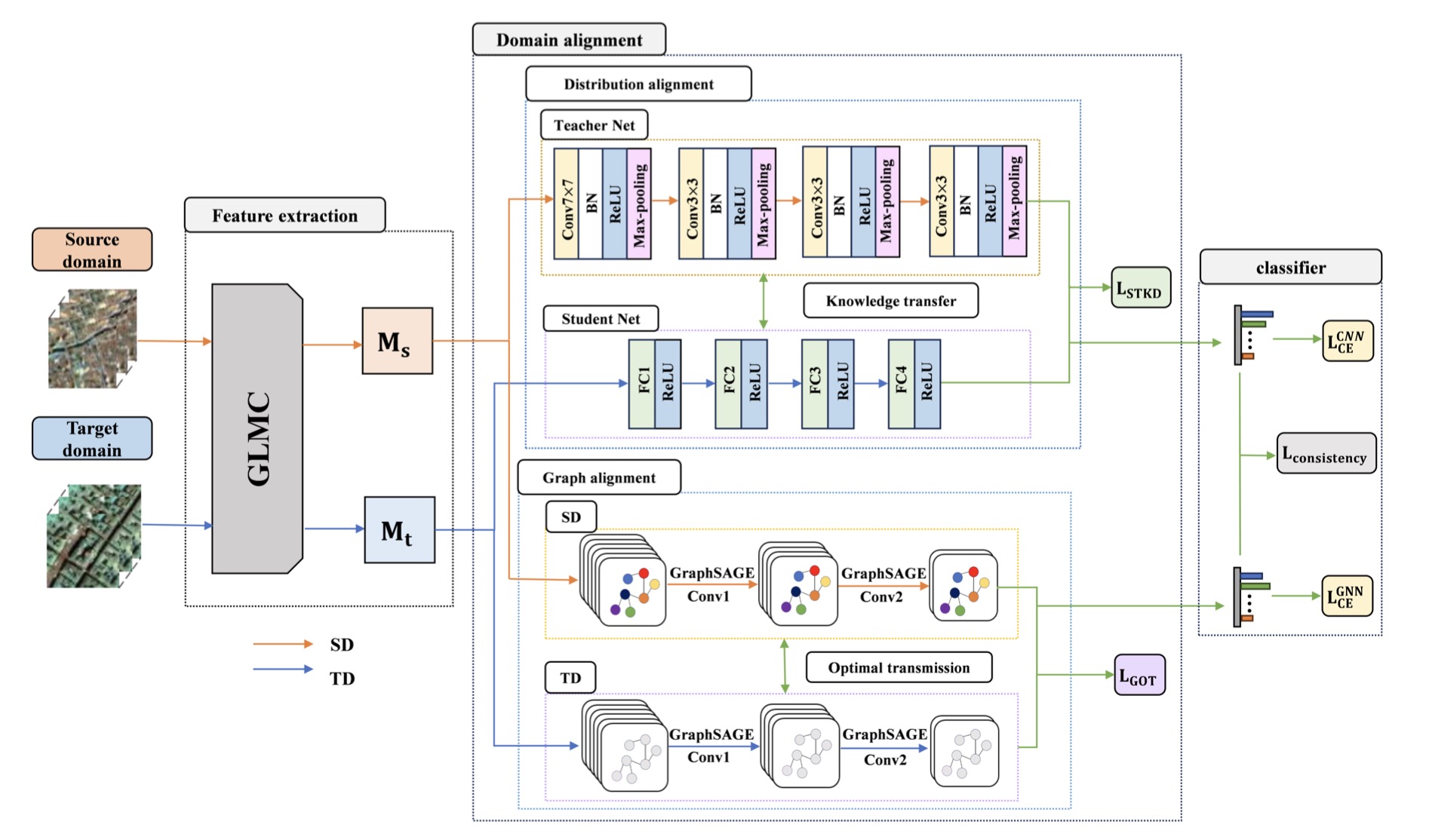

- Proposes MKDnet, a cross-domain HSI classifier integrating a Mamba–CNN hybrid encoder with knowledge distillation and OT-based graph alignment.

- Captures global context and fine local structure through dual-stream feature modeling and domain-consistent representation learning.

- Achieves state-of-the-art performance on multiple hyperspectral benchmarks under significant domain shift.

🎖 Honors and Awards

- 2025.09: Student Travel Grant, Boston University

- 2025.05: Ralph B. D’Agostino Endowed Fellowship, Boston University

- 2025.04: Outstanding Teaching Fellow Award, Boston University

- 2019.06: Outstanding Graduate, Shandong University

- 2018.10: Hua Loo-Keng Scholarship, Chinese Academy of Sciences

- 2018.09: First-Class Scholarship, Shandong University

- 2018.09: Outstanding Student Leader, Shandong University

📖 Education

- 2021.09 – Present: Ph.D. in Statistics, Boston University

- 2019.09 – 2020.05: M.A. in Statistics (Data Science Track), Columbia University

- 2015.09 – 2019.06: B.S. in Mathematics, Shandong University

💻 Internships

- 2025.05 – 2025.08: Data Scientist Intern, Plymouth Rock Insurance (Boston, MA)
  - Built an AWS SageMaker pipeline for property-level loss prediction; improved the Gini coefficient by 4.3% using XGBoost with a Tweedie objective
  - Developed LLM-powered image-based risk scoring using GPT-4o on Google Street View imagery; integrated the outputs into actuarial models

🎨 Interests

🎵 Mandarin R&B loyalist — Leehom Wang, David Tao, Khalil Fong, Dean Ting

🎹 Trained in piano, calligraphy, and ink painting